Deep Learning is a subset of Machine Learning (ML) that takes loose inspiration from how the human brain works. It relies on artificial neural networks, algorithms modeled on the brain's networks of neurons, that learn from the rapidly growing volumes of data around us.

Although it cannot match the full ability of the human brain, Deep Learning allows systems to group data into clusters and make predictions with high accuracy.

Deep Learning underpins many Artificial Intelligence (AI) applications, services, and solutions. It extends automation by letting systems perform analytical and physical tasks with little or no human intervention, often with high accuracy.

For example, digital products and services such as chatbots and virtual assistants, credit card fraud detection systems, and voice-controlled TV remotes, as well as emerging technologies like self-driving cars, are all backed by Deep Learning.

A Deep Learning model is essentially a neural network with three or more layers. These networks loosely simulate the behavior of the human brain and learn from large amounts of data.

A neural network with a single layer can still make approximate predictions, while additional layers help to optimize and refine those predictions for accuracy.

Deep Learning – How Does it Work?

Deep Learning is driven by neural networks with several layers: sets of algorithms modeled on the way the human brain works. What configures a neural network? Training on enormous amounts of data configures the neurons in the network.

This prepares the resulting Deep Learning model to process new data. Deep Learning models accept data from varied sources and can analyze it in real time without human intervention.

Training Deep Learning models is typically accelerated with GPUs (Graphics Processing Units), which can perform many computations simultaneously.

Many AI applications rely on Deep Learning to improve automation and analytical tasks. When you browse the internet or use a mobile phone or another AI-enabled device, you are often interacting with Deep Learning technology without noticing it.

Other Deep Learning applications include caption generation for YouTube videos, voice commands and speech recognition on smart speakers and smartphones, self-driving cars, facial recognition, and so on.

Deep Delve into Deep Learning Neural Networks

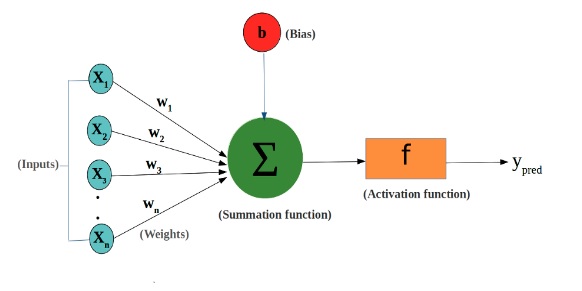

Also called Artificial Neural Networks, Deep Learning neural networks emulate the human brain through a combination of data inputs (X), weights (W), and biases (B). The weights and biases are the learnable parameters of the network.

These elements (X, W, B) work together to accurately recognize, classify, and describe objects within the data.
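As a minimal sketch of how X, W, and B combine in a single node: the node multiplies each input by its weight, adds the bias, and passes the sum through an activation function. The sigmoid activation and all numeric values below are assumptions chosen only for illustration.

```python
import math

def neuron(x, w, b):
    """Weighted sum of the inputs plus the bias, squashed by a sigmoid."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

x = [0.5, -1.0, 2.0]   # data inputs X (made-up values)
w = [0.4, 0.3, -0.2]   # weights W (made-up values)
b = 0.1                # bias B (made-up value)

output = neuron(x, w, b)
print(output)          # a value between 0 and 1
```

During training, only `w` and `b` change; `x` is whatever data the network is given.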

Simplest Types

- Forward Propagation

- Backward Propagation

Deep Learning neural networks are composed of several layers of interconnected nodes, and each layer builds upon the previous one to refine and optimize the prediction or categorization. This progression of computations through the network is called Forward Propagation.

The input and output layers of a deep neural network are called Visible Layers. The input layer is where the Deep Learning model ingests the data to process, and the output layer is where the final prediction or classification is made.
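Forward Propagation can be sketched as a loop that feeds the output of each layer into the next. The example below is a rough illustration, not a production implementation: the 2-3-1 layer sizes, the sigmoid activation, and every weight and bias are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer: each row of `weights` feeds one node."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the data through every layer in order, starting at the input."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden nodes -> 1 output node.
layers = [
    ([[0.2, -0.4], [0.7, 0.1], [-0.5, 0.3]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.6, -0.3, 0.8]], [0.05]),                                 # output layer
]

prediction = forward([1.0, 2.0], layers)
print(prediction)  # a one-element list holding the final prediction
```

The list passed to `forward` plays the role of the input layer, and the returned list is the output layer's prediction.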

Backward Propagation is the process that calculates the errors in predictions and then, using algorithms such as gradient descent, adjusts the function’s weights and biases by moving backward through the layers of the network in order to train the model.

Together, Forward Propagation and Backward Propagation make it possible for a neural network to make predictions and correct its errors. Gradually, the Deep Learning algorithm adjusts itself and becomes more accurate over time.
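The interplay of the two processes can be sketched with a tiny network that has one hidden node and one output node. Everything here is an assumption made for illustration: the `tanh` activation, the squared-error loss, the learning rate, the starting parameters, and the five training samples.

```python
import math

def train(samples, steps=2000, lr=0.1):
    """Fit y = w2 * tanh(w1 * x + b1) + b2 to the samples by gradient descent."""
    w1, b1, w2, b2 = 0.5, 0.0, 0.5, 0.0   # arbitrary starting parameters
    history = []
    n = len(samples)
    for _ in range(steps):
        g_w1 = g_b1 = g_w2 = g_b2 = 0.0
        loss = 0.0
        for x, target in samples:
            # Forward Propagation: compute the prediction.
            h = math.tanh(w1 * x + b1)
            y = w2 * h + b2
            error = y - target
            loss += 0.5 * error ** 2
            # Backward Propagation: apply the chain rule from output to input.
            g_w2 += error * h
            g_b2 += error
            d_h = error * w2             # error arriving at the hidden node
            d_z = d_h * (1.0 - h * h)    # tanh'(z) = 1 - tanh(z)^2
            g_w1 += d_z * x
            g_b1 += d_z
        # Gradient descent: move each parameter against its average gradient.
        w1 -= lr * g_w1 / n
        b1 -= lr * g_b1 / n
        w2 -= lr * g_w2 / n
        b2 -= lr * g_b2 / n
        history.append(loss / n)
    return (w1, b1, w2, b2), history

# Made-up training data: (input, target) pairs.
samples = [(-1.0, -0.8), (-0.5, -0.5), (0.0, 0.0), (0.5, 0.5), (1.0, 0.8)]
params, history = train(samples)
print(history[0], history[-1])  # average loss before and after training
```

The average loss recorded in `history` falls as the steps accumulate, which is the "becomes more accurate over time" behavior described above.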

Complex Types

- CNNs (Convolutional Neural Networks)

- RNNs (Recurrent Neural Networks)

Deep Learning algorithms are complex. Forward Propagation and Backward Propagation are the basic processes behind every neural network, while CNNs and RNNs are more complex network types that address specific kinds of datasets or problems.

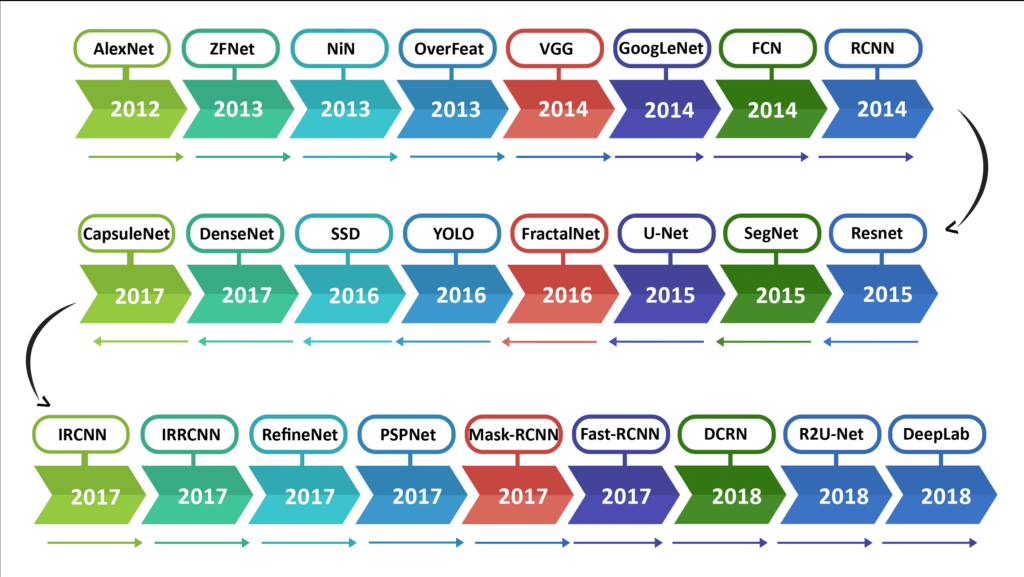

CNNs (Convolutional Neural Networks) are primarily used in computer vision and image classification applications. They can detect patterns and features within an image, enabling tasks such as object detection, object classification, and object recognition.
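The core operation of a CNN, sliding a small kernel across an image to detect a feature, can be sketched as follows. This is a simplified illustration with no padding and a stride of 1; the 4x4 image and the hand-picked edge kernel are assumptions, whereas a real CNN learns its kernels during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A 4x4 image with a dark left half (0) and a bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, exactly where the edge sits in the image.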

RNNs (Recurrent Neural Networks) are primarily used for NLP (Natural Language Processing) and speech recognition applications. RNNs work with sequential or time-series data.
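What makes an RNN suited to sequential data is a hidden state that is carried from one step to the next. A minimal sketch with a single recurrent node is shown below; the `tanh` activation, the parameter names, and the values are all assumptions for illustration.

```python
import math

def rnn_forward(sequence, w_in, w_rec, bias):
    """Process a sequence one value at a time, carrying a hidden state forward."""
    hidden = 0.0
    states = []
    for x in sequence:
        # The new state depends on the current input and the previous state.
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states

# Only the first input is non-zero, yet later states still "remember" it.
states = rnn_forward([1.0, 0.0, 0.0], w_in=0.8, w_rec=0.5, bias=0.0)
print(states)
```

Setting `w_rec` to 0 removes the memory: each state would then depend on the current input alone.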

Deep Learning Evolution – A Summary

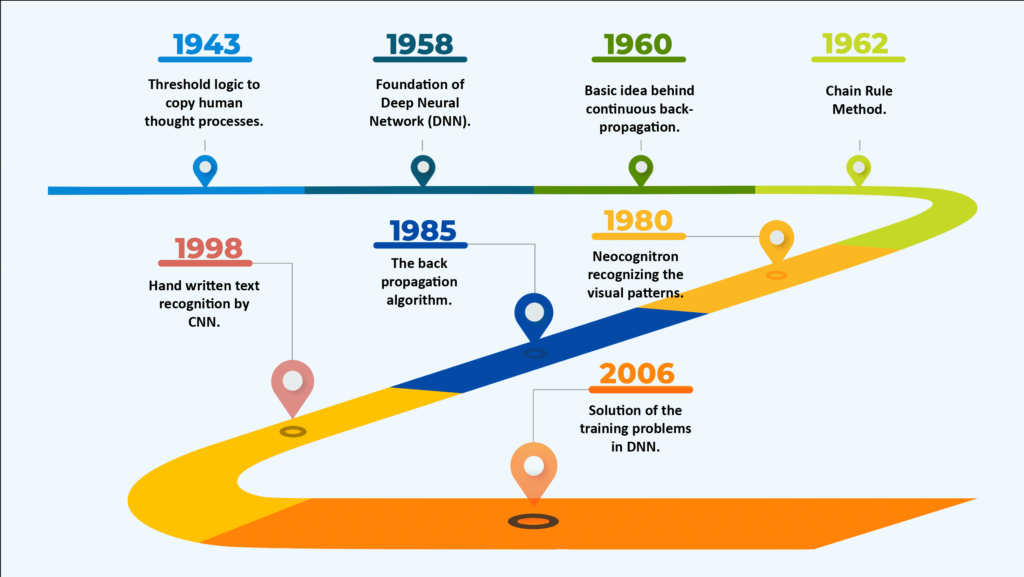

The evolution of Deep Learning started in 1943, when Warren McCulloch and Walter Pitts developed a computer model based on the neural networks of the human brain. They used ‘threshold logic’, a combination of algorithms and mathematics, to mimic the thought process.
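The idea of threshold logic can be illustrated with a unit that fires only when enough of its binary inputs are active. This is a simplified sketch in the spirit of that model, not a reproduction of the 1943 formulation; the function name and the thresholds are hypothetical.

```python
def threshold_unit(inputs, threshold):
    """Return 1 (fire) when enough binary inputs are active, otherwise 0."""
    return 1 if sum(inputs) >= threshold else 0

# With two inputs, a threshold of 2 behaves like AND and a threshold of 1 like OR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, threshold_unit([a, b], 2), threshold_unit([a, b], 1))
```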

Since then, Deep Learning has continued to evolve, except for two major breaks in its development during the infamous AI (Artificial Intelligence) winters, roughly 1974-1980 and 1987-1993.

Note: An AI winter is a period when AI funding and commercial research dry up, a quiet period for AI-related funding, research, and development. An AI summer, by contrast, is a period when AI innovation and investment peak.

In The 1960s

In 1960, Henry J. Kelley developed the basics of a continuous Back Propagation Model. Then, in 1962, Stuart Dreyfus developed a simpler version based on the chain rule. The earliest efforts to develop Deep Learning algorithms came in 1965 from Alexey Grigoryevich Ivakhnenko, who developed the Group Method of Data Handling, and Valentin Grigorʹevich Lapa, who wrote Cybernetics and Forecasting Techniques.

In The 1970s

The first Artificial Intelligence (AI) winter occurred during the 1970s. It hugely impacted Deep Learning research and AI as a whole. However, a few individuals continued their research without external funding. Kunihiko Fukushima was the first to use CNNs (Convolutional Neural Networks). He designed neural networks with multiple pooling and convolutional layers.

Then, in 1979, he developed an ANN (Artificial Neural Network) called the Neocognitron, which used a hierarchical, multilayered design. This design allowed a computer to learn to recognize visual patterns.

Although its basics date back to Henry J. Kelley’s work in 1960, the Back Propagation Model evolved significantly in 1970, when Seppo Linnainmaa wrote his master’s thesis, which included FORTRAN code for Back Propagation.

However, the concept was applied to neural networks only in 1985, when Rumelhart, Williams, and Hinton demonstrated that Back Propagation in a neural network could provide interesting distributed representations.

In The 1980s & 1990s

Yann LeCun provided the first practical demonstration of the Back Propagation Model at Bell Labs in 1989. The second AI winter also set in during this period, from the mid-to-late 1980s into the 1990s, and it hurt research into Deep Learning and neural networks.

During this period, AI was pushed toward the status of a pseudoscience. The field bounced back in 1995 with the development of the SVM (Support Vector Machine), and in 1997 LSTM (Long Short-Term Memory) was developed for recurrent neural networks. In 1999, GPUs (Graphics Processing Units) were developed.

From 2000-2010

The Vanishing Gradient Problem came to light around the year 2000. Researchers found that when error signals are passed backward through a network, the learning signal shrinks from layer to layer, so the layers furthest from the output receive almost no signal and learn very little.
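A rough way to see why the learning signal fades is to multiply together the per-layer factors it picks up on its way backward. The sketch below makes simplifying assumptions: every layer uses a sigmoid activation, has the same weight, and sees the same pre-activation value `z`. The sigmoid's slope is at most 0.25, so the product shrinks quickly with depth.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gradient_scale(depth, weight=1.0, z=0.0):
    """Factor applied to a learning signal after passing back through `depth` layers."""
    local = weight * sigmoid(z) * (1.0 - sigmoid(z))  # at most 0.25 * weight
    return local ** depth

for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Under these assumptions the signal is scaled by 0.25 per layer, so after 20 layers it is smaller than one part in a trillion.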

However, the problem did not affect all neural networks, only those trained with gradient-based learning methods. In 2001, META Group (now Gartner) published a research report describing the challenges and opportunities of data growth as three-dimensional: volume, velocity, and variety.

This also marked the onset of Big Data. In 2009, Professor Fei-Fei Li at Stanford launched ImageNet. She assembled a free database of over 14 million labeled images. Labeled images like these are needed to train neural nets.

2011-2020

By 2011, the speed and efficiency of GPUs had increased significantly. This made it possible to train CNNs without layer-by-layer pre-training. With the increased speed, Deep Learning began to make a significant impact, as shown by the creation of AlexNet.

AlexNet was a CNN whose architecture won several international competitions in 2011 and 2012. It used rectified linear units (ReLUs) to improve training speed, along with dropout. Then, in 2012, Google Brain released the results of The Cat Experiment, which explored the challenges of ‘unsupervised learning’, where a network learns from unlabeled data. Deep Learning, by contrast, mostly uses ‘supervised learning’ on labeled data. Many experiments and projects followed during this period.

In 2014, Ian Goodfellow introduced the GAN (Generative Adversarial Network). In a GAN, two neural networks play against each other in a game: one generates samples and the other tries to tell them apart from real data. This contest pushes the generator toward increasingly realistic output.

Importance Of Deep Learning

Deep Learning can deliver high accuracy in handling and analyzing data. Technology companies worldwide are increasingly investing in Deep Learning because accurate models earn trust, which in turn supports better decision-making across industries. In short, Deep Learning makes machines smarter.

For instance, Google’s AlphaGo made headlines when it defeated Lee Sedol, one of the world’s legendary professional Go players.

The Google Search engine makes heavy use of Deep Learning, as do applications such as speech recognition systems, self-driving cars, and drones. The technology is making an impact across industries and helping businesses improve their returns.